Overview

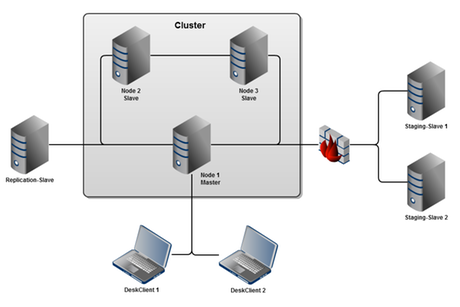

The Sophora Server can be operated as a Sophora cluster.

A cluster consists of several interconnected Sophora Servers. Each cluster node operates in one of two modes: master (Sophora Primary Server) or slave (Sophora Replica Server).

The Sophora Primary Server replicates all of its data to the Sophora Replica Server nodes of the cluster.

The Sophora Clients (e.g. Staging Servers and DeskClients) only connect to the Sophora Primary Server node of the cluster.

The Sophora Primary Server can easily be moved to another node of the cluster.

The previous Sophora Primary Server node then switches to "slave" mode. The move is transparent to connected Sophora Clients; they automatically reconnect to the new Sophora Primary Server.

Each Sophora Client keeps a list of known cluster nodes; on startup, the Sophora Client tries these nodes one after another until it finds the Sophora Primary Server.

No persistent queues

In former versions, a persistent JMS queue was created for every Sophora Replica Server connected to a Sophora Primary Server. While a Sophora Replica Server was down, all changes on the Sophora Primary Server were stored in this queue.

When the Sophora Replica Server was restarted, all queued changes were transmitted to it.

Since version 1.38, there are no persistent queues. Sophora Replica Servers can dynamically connect to and disconnect from the master.

The configuration properties sophora.replication.master.queueNames and sophora.replication.slave.queueName are obsolete.

When a Sophora Replica Server reconnects to a Sophora Primary Server, it synchronizes its content much like the Sophora Staging Servers do.

Based on the last modification date of the documents in its repository, the Sophora Replica Server sends a sync request to the Sophora Primary Server.

The Sophora Primary Server then sends all changed documents and structure nodes to the Sophora Replica Server.

For documents, all missing versions are transmitted. All other data (node type configurations, users, roles, etc.) is sent to the Sophora Replica Server, too.
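The synchronization steps above can be sketched in Python. This is a simplified model, not Sophora's implementation: the `Document` type and the `sync_request` function are hypothetical, repositories are in-memory dictionaries keyed by UUID, and the other data (node type configurations, users, roles) is left out. The function returns what the Primary Server would send.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Document:
    """Hypothetical, simplified document: an ID, its version labels, a timestamp."""
    uuid: str
    versions: list[str]
    last_modified: datetime


def sync_request(replica: dict[str, Document], primary: dict[str, Document]) -> dict[str, list[str]]:
    """Return, per document UUID, the versions the replica is missing."""
    # Replica side: the newest modification date in the local repository.
    since = max((d.last_modified for d in replica.values()), default=datetime.min)

    # Primary side: documents changed since that date. The comparison is
    # inclusive so that documents sharing the newest timestamp are not skipped.
    changed = [d for d in primary.values() if d.last_modified >= since]

    missing: dict[str, list[str]] = {}
    for doc in changed:
        known = set(replica[doc.uuid].versions) if doc.uuid in replica else set()
        todo = [v for v in doc.versions if v not in known]
        if todo:
            missing[doc.uuid] = todo
    return missing
```

Only the replica's newest modification date crosses the wire in this model; the Primary Server works out everything else.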

Getting started

To start a Sophora Cluster, at least two Sophora Servers in cluster mode are needed. A JMS broker runs in every cluster node; therefore, the configuration differs from the configuration of a normal Sophora Server:

Cluster Node 1

sophora.home=...

sophora.rmi.servicePort=1198

sophora.rmi.registryPort=1199

sophora.replication.mode=cluster

sophora.local.jmsbroker.host=hostname1

sophora.local.jmsbroker.port=1197

sophora.remote.jmsbroker.host=hostname2

sophora.remote.jmsbroker.port=1297

sophora.replication.slaveHostname=hostname1

Cluster Node 2

sophora.home=...

sophora.rmi.servicePort=1298

sophora.rmi.registryPort=1299

sophora.replication.mode=cluster

sophora.local.jmsbroker.host=hostname2

sophora.local.jmsbroker.port=1297

sophora.remote.jmsbroker.host=hostname1

sophora.remote.jmsbroker.port=1197

sophora.replication.slaveHostname=hostname2

The configuration of the remote JMS broker (sophora.remote.jmsbroker.*) must point to one of the other cluster nodes. It is only used to find the Sophora Primary Server initially; the remote server does not have to be the Sophora Primary Server at all times.
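Because each node's remote broker must point to another node, the per-node configurations mirror each other. The Python sketch below generates the cluster-related properties for any number of nodes by pointing each node's remote broker at the next node in a ring. The `Node` type, the function name, and the ring layout are illustrative assumptions; only the property names and the example values come from this page, and `sophora.home` is left out.

```python
from dataclasses import dataclass


@dataclass
class Node:
    host: str
    service_port: int   # sophora.rmi.servicePort
    registry_port: int  # sophora.rmi.registryPort
    jms_port: int       # sophora.local.jmsbroker.port


def cluster_properties(nodes: list[Node]) -> list[str]:
    """Return one sophora.properties fragment (cluster settings only) per node."""
    if len(nodes) < 2:
        raise ValueError("a Sophora cluster needs at least two nodes")
    fragments = []
    for i, node in enumerate(nodes):
        # Any other node would do; this sketch uses the next one in a ring.
        remote = nodes[(i + 1) % len(nodes)]
        lines = [
            f"sophora.rmi.servicePort={node.service_port}",
            f"sophora.rmi.registryPort={node.registry_port}",
            "sophora.replication.mode=cluster",
            f"sophora.local.jmsbroker.host={node.host}",
            f"sophora.local.jmsbroker.port={node.jms_port}",
            f"sophora.remote.jmsbroker.host={remote.host}",
            f"sophora.remote.jmsbroker.port={remote.jms_port}",
            f"sophora.replication.slaveHostname={node.host}",
        ]
        fragments.append("\n".join(lines))
    return fragments


nodes = [Node("hostname1", 1198, 1199, 1197), Node("hostname2", 1298, 1299, 1297)]
for fragment in cluster_properties(nodes):
    print(fragment, end="\n\n")
```

For two nodes, the ring simply makes each node point at the other, which reproduces the two example configurations.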

The mode of a cluster node can be set with the system property clusterMode. This property may be specified on the command line when starting the Sophora Server:

./sophora.sh -start -clusterMode master

Valid values for the system property are:

- master

- slave

- open (The Sophora Server which starts first becomes the Sophora Primary Server)

When a cluster with two or more nodes is running, the list of cluster nodes is exchanged and stored in the repository of every connected Sophora Server.

After a restart of one of the Sophora Servers, this list is used to find the Sophora Primary Server: each Sophora Server on the list is contacted and checked.

When a remote Sophora Server from this list is not running or is not in Sophora Primary Server mode, the next Sophora Server from the list is checked.

The list is accessible via JMX. Entries of Sophora Staging Servers are removed when the respective Staging Server is stopped; all other nodes are stored permanently.

When a Sophora Server leaves the cluster permanently, it can be removed from the list via JMX. If this is done on the current Sophora Primary Server, the change is transmitted to all currently connected Sophora Servers.

When a cluster node is started it tries to find the other cluster nodes:

- The configured remote JMS broker is asked if it is running as Sophora Primary Server.

- If the configured remote JMS broker is not running as Sophora Primary Server or the JMS broker is not reachable, all other known JMS brokers (stored in the repository) are tried.

- If none of the other servers is running as Sophora Primary Server, the currently started server becomes the Sophora Primary Server. Otherwise the Sophora Server starts as a Sophora Replica Server and synchronizes its content with the Sophora Primary Server.
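The startup steps above amount to probing candidates in a fixed order. The Python sketch below is a hypothetical model: broker addresses are "host:port" strings, `is_primary` stands in for the JMS query a real node performs, and the clusterMode system property is ignored.

```python
from typing import Callable, Optional


def find_primary(configured_remote: str,
                 known_brokers: list[str],
                 is_primary: Callable[[str], bool]) -> Optional[str]:
    """Return the broker address of the running Primary Server, or None.

    `is_primary` must return False for brokers that are unreachable or
    not running as Primary Server.
    """
    # 1. Ask the configured remote broker first.
    # 2. Then try all other brokers known from the repository, in stored order.
    candidates = [configured_remote] + [b for b in known_brokers if b != configured_remote]
    for broker in candidates:
        if is_primary(broker):
            return broker
    return None


def start_node(configured_remote: str,
               known_brokers: list[str],
               is_primary: Callable[[str], bool]) -> str:
    primary = find_primary(configured_remote, known_brokers, is_primary)
    # 3. No Primary Server found: this node becomes the Primary Server;
    #    otherwise it starts as a Replica Server and synchronizes with it.
    return "primary" if primary is None else f"replica of {primary}"
```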

Server-ID

Every server has a unique server ID, which is stored in the file <sophora.home>/data/server.properties.

When copying the home directory to create a new Sophora Replica Server, make sure not to copy this file; otherwise both servers would share the same ID. The current Sophora Server ID can be checked via JMX.
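Python's `shutil.copytree` with an `ignore` callback can copy a home directory while leaving out the server ID file. The function name is hypothetical. Only `<sophora.home>/data/server.properties` is skipped; files with the same name in other directories are still copied.

```python
import shutil
from pathlib import Path


def copy_home_without_server_id(src: Path, dst: Path) -> None:
    """Copy a Sophora home directory, skipping <sophora.home>/data/server.properties."""
    server_id_file = (src / "data" / "server.properties").resolve()

    def ignore(directory: str, names: list[str]) -> set[str]:
        # copytree calls this once per directory; return the entries to skip.
        return {n for n in names if (Path(directory) / n).resolve() == server_id_file}

    shutil.copytree(src, dst, ignore=ignore)
```

For example, `copy_home_without_server_id(Path("/opt/sophora-node1"), Path("/opt/sophora-node3"))` would create the new home directory; the paths are placeholders.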

Clients connecting to a cluster node

Clients can only connect to the current Sophora Primary Server (or to a Sophora Staging Server).

Users with the system permission 'administration' can connect to a Sophora Replica Server by using a special extension to the connection URL. Adding '?force=true' connects a Sophora Client (DeskClient or other clients like the Importer) to any Sophora Server, regardless of its current state.

http://slave.sophora.com:1196/?force=true

When a client connects with the force flag, it never switches automatically to a different Sophora Server.

When a Sophora Client tries to connect to a Sophora Replica Server, this is detected and the client is automatically redirected to the Sophora Primary (master) Server.

If the Sophora Replica Server is running and connected to the cluster, the list of all Sophora Servers is sent to the Sophora Client, which uses it to find the current Sophora Primary Server.

When a client tries to connect to a Sophora Replica Server that is not currently running, a different approach is used.

Every time a client successfully connects to a Sophora Cluster, the list of all cluster nodes is sent to the Sophora Client and stored persistently in the file system.

Later, when the Sophora Client tries to reconnect to the cluster and the configured Sophora Server is not reachable, the client uses this stored list to find the current Sophora Primary Server.

In all clients, the directory for this file is configured via the property sophora.client.dataDir. The DeskClient stores the file in its workspace without any further configuration.
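The client-side fallback can be sketched in Python as follows. The file name `cluster-nodes.json`, its JSON format, and the `is_primary` probe are assumptions for illustration; this page only states that the node list is stored in the directory configured by sophora.client.dataDir.

```python
import json
from pathlib import Path
from typing import Callable, Optional

NODE_LIST_FILE = "cluster-nodes.json"  # hypothetical file name


def store_cluster_nodes(data_dir: Path, nodes: list[str]) -> None:
    """Persist the node list received after a successful connection."""
    data_dir.mkdir(parents=True, exist_ok=True)
    (data_dir / NODE_LIST_FILE).write_text(json.dumps(nodes), encoding="utf-8")


def reconnect(configured_url: str, data_dir: Path,
              is_primary: Callable[[str], bool]) -> Optional[str]:
    """Try the configured server first, then every stored cluster node."""
    stored_file = data_dir / NODE_LIST_FILE
    stored = json.loads(stored_file.read_text(encoding="utf-8")) if stored_file.exists() else []
    for url in [configured_url] + [u for u in stored if u != configured_url]:
        if is_primary(url):
            return url
    return None  # no Primary Server reachable
```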

Switching the master

In a cluster the Sophora Primary Server can switch to another Sophora Server.

Currently, the switch can only be done manually. Even when the current Sophora Primary Server is stopped, no new Sophora Primary Server is elected automatically.

The switch can be started via JMX on any cluster node. A SwitchMasterRequest is sent to all Sophora Servers and a ClientReconnectRequest is sent to all Sophora Clients which are currently connected to the old Sophora Primary Server.

The new Sophora Primary Server is available immediately. All other cluster nodes (including the old Sophora Primary Server) connect to the new Sophora Primary Server and synchronize their content.

All clients log out of the old Sophora Primary Server and log in to the new Sophora Primary Server automatically. No further action is needed.

All operations in the clients are blocked (at the ISophoraContent interface) while the Sophora Client is connecting to the new Sophora Primary Server. As soon as the connection is established, the blocked operations continue.
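Blocking client operations during a master switch resembles a simple gate: calls wait while the client reconnects and proceed once the new connection is established. The Python sketch below illustrates the idea with `threading.Event`. The class `ReconnectGate` and its methods are hypothetical and are not part of the ISophoraContent interface. It is deliberately simplified: a call that has already passed the gate is not interrupted by a later reconnect.

```python
import threading
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class ReconnectGate:
    """Blocks calls while the client reconnects and releases them afterwards."""

    def __init__(self) -> None:
        self._open = threading.Event()
        self._open.set()  # connected: calls pass through immediately

    def begin_reconnect(self) -> None:
        self._open.clear()  # new calls now wait

    def end_reconnect(self) -> None:
        self._open.set()  # release all waiting calls

    def call(self, operation: Callable[[], T], timeout: Optional[float] = None) -> T:
        # Wait until the client is connected to the new Primary Server.
        if not self._open.wait(timeout):
            raise TimeoutError("client is still reconnecting")
        return operation()
```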

ReadAnywhere cluster configuration

A Sophora Cluster can operate in a ReadAnywhere mode.

In this setting, read-only requests from clients are distributed across the Sophora Replica Servers of the cluster.

This reduces the number of requests directed to the Sophora Primary Server, which lowers its load and improves its performance.

The ReadAnywhere mode is enabled globally in a configuration document. Alternatively, an individual Sophora Client can enable it with an additional connection URL parameter.

The connection parameter can be used to test the ReadAnywhere cluster, while the parameter in the configuration document enables the ReadAnywhere cluster for all connected Sophora Clients.

However, the benefits of the ReadAnywhere cluster only take full effect when all connecting Sophora Clients use it.

The parameter in the configuration document is:

sophora.client.connection.isReadAnywhere=true

The connection parameter ?readanywhere=true enables the ReadAnywhere functionality for a single Sophora Client only, as in the following connection URL:

http://localhost:1196?readanywhere=true
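Both connection URL parameters mentioned on this page, readanywhere and force, are plain query parameters. A small hypothetical Python helper, using only the standard library, can add or replace such a flag in an existing connection URL:

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_connection_flag(url: str, name: str, value: str = "true") -> str:
    """Add or replace a query parameter such as readanywhere or force."""
    parts = urlsplit(url)
    # Drop any existing value for this parameter, keep all others.
    query = [(k, v) for k, v in parse_qsl(parts.query) if k != name]
    query.append((name, value))
    return urlunsplit(parts._replace(query=urlencode(query)))
```

For example, `with_connection_flag("http://localhost:1196", "readanywhere")` yields the URL shown above.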

Exclusion of Servers from a ReadAnywhere cluster

There may be servers in a Sophora Cluster which should not be used in a ReadAnywhere cluster.

Examples are Sophora Servers running on weaker hardware or servers that are only used for backup purposes.

Such Sophora Servers can be excluded from a ReadAnywhere cluster with the following configuration parameter (in the sophora.properties file):

sophora.cluster.readAnywhere.available=false

These servers act as normal Sophora Replica Servers, but no ReadAnywhere requests are directed to them. The default value of this parameter is true.

This configuration parameter is also available via the JMX MBean ContentManager, which additionally offers the operation toggleReadAnywhereStatus() to toggle the value.
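A client-side view of ReadAnywhere routing might look like the following Python sketch: write requests go to the Sophora Primary Server, and read-only requests rotate over the Sophora Replica Servers that have not been excluded with sophora.cluster.readAnywhere.available=false. Round-robin selection and the fallback to the Primary Server when no replica is eligible are assumptions for illustration; this page does not describe how Sophora actually picks a replica.

```python
import itertools
from dataclasses import dataclass


@dataclass
class ClusterNode:
    name: str
    is_primary: bool
    read_anywhere_available: bool = True  # sophora.cluster.readAnywhere.available


class ReadAnywhereRouter:
    """Send writes to the Primary Server and spread reads over eligible replicas."""

    def __init__(self, nodes: list[ClusterNode]) -> None:
        # Assumes exactly one node is the Primary Server.
        self.primary = next(n for n in nodes if n.is_primary)
        readers = [n for n in nodes if not n.is_primary and n.read_anywhere_available]
        # Round-robin over eligible replicas; fall back to the primary if none remain.
        self._readers = itertools.cycle(readers or [self.primary])

    def route(self, read_only: bool) -> ClusterNode:
        return next(self._readers) if read_only else self.primary
```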

Cluster for Sophora Delivery Web Application

In normal setups every Sophora Delivery Web Application is connected to its own Sophora Staging Server, which serves the data only for this delivery.

The connection is configured in the sophora.properties of the web application and can only be changed after a restart:

sophora.serviceUrl=rmi://localhost:1199/ContentManager

sophora.delivery.client.username=webadmin

sophora.delivery.client.password=admin

The Sophora Cluster adds a more flexible connection between Sophora Staging Servers and web applications. It provides two new features:

- It is possible to manually change the connection to the Sophora Staging Server.

- The web application can automatically change the connection, when the current Sophora Staging Server is no longer available.

Manually changing the connection to another Staging Server

The JMX interface of the web application offers a new section "com.subshell.sophora:Type=Server" which lists all available Sophora Staging Servers.

When the Sophora Primary Server of the Sophora system runs in cluster mode and the web application is currently connected to a Sophora Staging Server, every JMX entry offers a connectDeliveryToThisServer operation.

Automatically switching to another Sophora Staging Server

The automatic switching of a Sophora web application to a different Sophora Staging Server requires two new properties in the sophora.properties:

sophora.delivery.client.autoReconnectEnabled=true

sophora.delivery.client.autoReconnectTo=staging1.customer.com:1199,staging2.customer.com:1199

The first property activates the feature. The second property lists the service URLs of alternative Staging Servers. These connections supplement the primary connection (sophora.serviceUrl), which remains configured. The same user name and password are used for all connections.

When the current Staging Server is not available, the alternative Staging Servers are tested in the configured order. The first available Sophora Staging Server is chosen and a new connection to it is established.

After an automatic switch (in contrast to a manual switch), the web application tries to switch back to the preferred Sophora Staging Server (configured by sophora.serviceUrl).

Every 60 seconds the preferred Staging Server is probed and if it is available, the web application switches back.
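The failover and switch-back behavior can be modeled as a small state machine. This Python sketch is a hypothetical illustration, not the web application's code: the class `DeliveryConnection`, the `is_available` probe, and passing the current time explicitly (so the 60-second interval can be tested without waiting) are assumptions. It models only the automatic switch; a manual switch via JMX, after which no switch back happens, is not covered.

```python
from typing import Callable


class DeliveryConnection:
    """Failover between Staging Servers with periodic switch-back to the preferred one."""

    PROBE_INTERVAL = 60.0  # seconds between probes of the preferred server

    def __init__(self, preferred: str, auto_reconnect_to: str,
                 is_available: Callable[[str], bool]) -> None:
        self.preferred = preferred  # from sophora.serviceUrl
        # Value of sophora.delivery.client.autoReconnectTo: comma-separated, in priority order.
        self.alternatives = [s.strip() for s in auto_reconnect_to.split(",") if s.strip()]
        self.is_available = is_available
        self.current = preferred
        self._last_probe = 0.0

    def on_connection_lost(self, now: float) -> str:
        # Test the alternatives in the configured order; the first available one wins.
        for candidate in self.alternatives:
            if candidate != self.current and self.is_available(candidate):
                self.current = candidate
                self._last_probe = now
                return candidate
        raise ConnectionError("no Staging Server available")

    def tick(self, now: float) -> str:
        # After an automatic switch, probe the preferred server every PROBE_INTERVAL seconds.
        if self.current != self.preferred and now - self._last_probe >= self.PROBE_INTERVAL:
            self._last_probe = now
            if self.is_available(self.preferred):
                self.current = self.preferred
        return self.current
```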